Moosfet's Synthesizer

Moosfet's Synthesizer is a software synthesizer for Linux that is designed to produce notes with as little latency as possible using a design that is immune to the problem of xruns. It is presently little more than a proof of concept. It'll make sounds and let you change some junk, but don't expect it to be useful for more than showing that this is indeed possible.

How does it work?

The design is so simple that, frankly, I have no idea why no one else has done it yet:

The synthesizer starts by allocating a large audio buffer, then does its best to keep the buffer completely full with the sound of all notes which are currently playing. When a new note-on event arrives, it rewinds its synthesis engine and overwrites the audio in the buffer with the new audio that includes that note. When a new note-off event arrives, it rewinds its synthesis engine and writes new audio that doesn't include that note. The same applies to pitch bends or any other MIDI events.
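Here is a minimal sketch of the rewind-and-overwrite idea in C. It is not moosynth's code: every name is hypothetical, the sample rate (48000 Hz), buffer length and 1 ms safety margin are assumed, and a naive square wave stands in for a real synthesis engine. Because the toy engine's output is a pure function of the frame number and the list of notes, "rewinding" it just means evaluating it again from an earlier frame; a real engine with oscillator phases, envelopes and filters would presumably have to snapshot that state so it can restart from an earlier point.

```c
#include <assert.h>

#define RATE       48000  /* assumed sample rate */
#define BUF_FRAMES 4800   /* hypothetical 100 ms buffer */
#define MARGIN     48     /* hypothetical 1 ms safety margin ahead of playback */
#define MAX_NOTES  16

typedef struct { int freq; long start, end; } Note;  /* end < 0: still held */

typedef struct {
    float audio[BUF_FRAMES];  /* ring buffer: frame t lives at audio[t % BUF_FRAMES] */
    long  rendered_to;        /* every frame before this one has been written */
    Note  notes[MAX_NOTES];
    int   n_notes;
} Synth;

/* Toy engine: a naive square wave per note. Output depends only on the
 * absolute frame t and the note list, so rewinding is just re-evaluating. */
static float synth_sample(const Synth *s, long t) {
    float x = 0.0f;
    for (int i = 0; i < s->n_notes; i++) {
        const Note *n = &s->notes[i];
        if (t >= n->start && (n->end < 0 || t < n->end))
            x += ((2LL * n->freq * t / RATE) & 1) ? -0.1f : 0.1f;
    }
    return x;
}

/* Write frames [from, to) into the ring buffer. */
static void render(Synth *s, long from, long to) {
    for (long t = from; t < to; t++)
        s->audio[t % BUF_FRAMES] = synth_sample(s, t);
}

/* Keep the buffer completely full, up to play_pos + BUF_FRAMES. */
static void fill(Synth *s, long play_pos) {
    if (s->rendered_to < play_pos)
        s->rendered_to = play_pos;           /* never render the past */
    if (s->rendered_to < play_pos + BUF_FRAMES) {
        render(s, s->rendered_to, play_pos + BUF_FRAMES);
        s->rendered_to = play_pos + BUF_FRAMES;
    }
}

/* Rewind: overwrite everything already written from frame t0 onward. */
static void rewind_from(Synth *s, long t0) {
    if (t0 < s->rendered_to)
        render(s, t0, s->rendered_to);
}

/* Note-on: the note starts at the earliest frame the hardware hasn't read. */
static void note_on(Synth *s, long play_pos, int freq) {
    assert(s->n_notes < MAX_NOTES);
    long t0 = play_pos + MARGIN;
    s->notes[s->n_notes++] = (Note){ freq, t0, -1 };
    rewind_from(s, t0);
}

/* Note-off: end the held note at the same point, then rewind the same way. */
static void note_off(Synth *s, long play_pos, int freq) {
    long t0 = play_pos + MARGIN;
    for (int i = 0; i < s->n_notes; i++)
        if (s->notes[i].freq == freq && s->notes[i].end < 0)
            s->notes[i].end = t0;
    rewind_from(s, t0);
}
```

The frames between the playback position and play_pos + MARGIN are never rewritten, on the assumption that the hardware may already have read them, so in this model the margin is the latency a new event sees.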

In the event of CPU latency, rewriting of the audio buffer is delayed, but as the buffer already contains audio, no xrun occurs. Instead you simply hear what you would have heard had those MIDI events not occurred. The result is that latency only affects new events, and only to the degree that it has to, e.g. if your system can do 1 ms latency 99% of the time, but once a second it has a 10 ms hiccup, then you can have 1 ms latency 99% of the time and only deal with 10 ms of latency during those hiccups, and only with respect to MIDI events that occur during that 10 ms.
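The arithmetic behind that example can be written out as a tiny C sketch. The model is a simplification assumed for illustration: a conventional synthesizer has to size its buffer for the worst hiccup, while with rewinding an event waits the hiccup length only if it arrives during a hiccup. The function names are hypothetical.

```c
#include <assert.h>

/* A conventional synthesizer has to size its buffer for the worst case,
 * so every event waits as long as the worst hiccup, hiccup or not. */
static double fixed_buffer_latency_ms(double normal_ms, double hiccup_ms) {
    return hiccup_ms > normal_ms ? hiccup_ms : normal_ms;
}

/* With rewinding, only events arriving during a hiccup pay for it.
 * hiccup_fraction is the share of time the system spends stalled. */
static double rewind_mean_latency_ms(double normal_ms, double hiccup_ms,
                                     double hiccup_fraction) {
    assert(hiccup_fraction >= 0.0 && hiccup_fraction <= 1.0);
    return (1.0 - hiccup_fraction) * normal_ms + hiccup_fraction * hiccup_ms;
}
```

With the numbers from the example (1 ms normally, a 10 ms hiccup once a second, i.e. a hiccup fraction of 0.01), the rewinding design averages 0.99 × 1 + 0.01 × 10 = 1.09 ms per event, against 10 ms for every event with a worst-case buffer.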

ALSA's limitations currently prevent it, but it's worth noting that MIDI events from a pre-recorded track in a sequencer could be known by the synthesizer a second in advance, and thus be rendered into the audio buffer from the very beginning, so that none of them would ever suffer any latency. In that case, momentary latency from CPU hiccups would affect only live notes from an external MIDI keyboard, which is as it should be, since the sequencer knows about everything else in its entirety before the play button is even clicked. It's only poor design that makes latency such a pervasive issue. Latency and xruns should never be issues with pre-recorded tracks.
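Under assumptions of the same kind (hypothetical names, an assumed 1 ms margin of 48 frames at 48000 Hz), the difference between a sequencer event known in advance and a live key press comes down to where the event's timestamp falls relative to the playback position and to what has already been rendered:

```c
#include <assert.h>

#define MARGIN 48  /* hypothetical 1 ms safety margin at an assumed 48000 Hz */

typedef enum { RENDER_IN_ORDER, REWIND, LATE } Handling;

/* Decide how an event meant for frame `when` is handled if it reaches the
 * synthesizer while the hardware is at `play_pos` and audio has been
 * rendered up to `rendered_to`. *late_frames gets how late it will sound. */
static Handling classify_event(long when, long play_pos, long rendered_to,
                               long *late_frames) {
    long earliest = play_pos + MARGIN;   /* first frame that can still change */
    assert(rendered_to >= play_pos);
    *late_frames = 0;
    if (when < earliest) {
        *late_frames = earliest - when;  /* the hardware may already have read it */
        return LATE;
    }
    /* Not rendered yet: nothing to redo. Otherwise re-render from `when`. */
    return when >= rendered_to ? RENDER_IN_ORDER : REWIND;
}
```

A sequencer event known a second ahead falls past rendered_to and is simply rendered in order, with no rewind and no latency. A live key press can't be timestamped earlier than the moment it arrives, so at best it lands MARGIN frames late.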

How do I get it?

Well, you'll have to compile it from source, which is easy, but it has a dependency for which your distribution may not have a package, and compiling that isn't so easy. ...but I'll try to make it as painless as possible.

First, if you're using Linux Mint 18 or newer, then your distribution does have that dependency, so just type this:

sudo apt-get install libglfw3-dev

However, if you're using an older version of Linux Mint, then you'll have to install GLFW 3 manually, like this:

First grab a copy of the source for GLFW 3 from www.glfw.org.

GLFW has a few dependencies you'll need to install before you can compile it, and my code has one additional dependency that you'll need to install. In Linux Mint, and I assume also in Ubuntu and Debian, you can install these dependencies with the following command:

sudo apt-get install cmake xorg-dev libgl1-mesa-dev

With that out of the way, you need to compile the GLFW shared library. To do that, run these three commands from within the GLFW source tree:

cmake -DBUILD_SHARED_LIBS=1 .

make

sudo make install

Now that the shared library is built and installed, you have to configure your system to find it. To do that, just type this command anywhere:

sudo ldconfig

Now you need to install the other dependencies of my code. To do that, just type this:

sudo apt-get install xorg-dev libasound2-dev

Now you're finally at the point where you can compile my code!

wget http://www.ecstaticlyrics.com/music/synthesizer/moosynth-50.tgz

tar -xzf moosynth-50.tgz

cd moosynth-50

./compile

Assuming all goes well, you'll now have an executable named "moosynth" which you can execute like this:

./moosynth --device hw:0,0

I've tested these instructions in fresh installs of Linux Mint 17.2 64-bit Mate Edition, and Linux Mint 18 64-bit Mate Edition, so the process should work perfectly with them. As for anything else, well, good luck. You can contact me for help if you need to, and indeed it wouldn't be a bad idea for me to know of potential issues so that these instructions can be better, but try not to drown me with questions that you'll have figured out the answer to before I respond. Also note that my familiarity with other distributions is virtually non-existent.

How do I use it?

The first step is to select the correct ALSA device to use it with. Because it works by overwriting previously-committed audio in the audio buffer, it won't work with every ALSA device name. It needs one that provides direct access to the DMA buffer; otherwise the real-time synthesis won't work. Unfortunately ALSA doesn't provide any means to determine which device names provide direct DMA access. Indeed, I'm not entirely sure that any of them do, as it could just be that with some of them the device reads from the buffer as soon as possible, while with others it waits until the last possible moment. Anyway, if you choose the wrong one, you'll suffer from latency and the output may also be noisy. If this happens, try a different ALSA device name.
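A toy model of why the device name matters, following the guess above that some devices read the buffer as soon as possible and others at the last possible moment. Nothing here touches ALSA; the eight-frame buffer and all names are made up for illustration.

```c
#include <assert.h>
#include <string.h>

#define FRAMES 8  /* tiny hypothetical buffer, in frames */

typedef enum { READS_EAGERLY, READS_AT_LAST_MOMENT } Policy;

/* The application fills the buffer with old_audio, then, after a note-on,
 * rewinds and rewrites frames [from, FRAMES) with new_audio. `heard`
 * receives what the device actually plays under the given read policy. */
static void simulate(Policy p, int from, const int old_audio[FRAMES],
                     const int new_audio[FRAMES], int heard[FRAMES]) {
    int buffer[FRAMES];
    assert(from >= 0 && from <= FRAMES);
    memcpy(buffer, old_audio, sizeof buffer);     /* initial fill */
    if (p == READS_EAGERLY)
        memcpy(heard, buffer, sizeof buffer);     /* copied out right away */
    for (int i = from; i < FRAMES; i++)
        buffer[i] = new_audio[i];                 /* rewind and overwrite */
    if (p == READS_AT_LAST_MOMENT)
        memcpy(heard, buffer, sizeof buffer);     /* read just before playback */
}
```

With the eager policy the rewritten frames never reach the speaker, so a new note would presumably only be heard once the synthesizer writes audio past what the device has already copied, roughly a full buffer later.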

I've tested it on three systems and four sound cards so far, and "hw:0,0" has always been a good choice. In half of the cases even "default" worked fine. So start out by running this command:

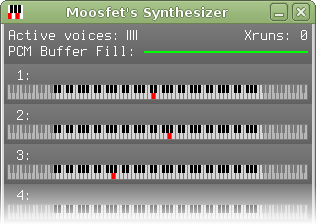

To make sound, you need to connect something to it which is able to send it MIDI events. (Those piano keyboards in the GUI are informational-only, you can't click on them to play notes.) For example, to play a MIDI file, first find the port number using the aconnect command, like this:

From this we see that the Client ID of the synthesizer is 128. So to play a MIDI through it, just type something like this:

As for how to use the synthesizer itself, at present it doesn't do a whole lot. If you can't figure out how to do something (e.g. save the instruments you create) it's because it doesn't do that yet. It's a work in progress.

The sole exception to that is that it does have some support for percussion. Create a directory in ~/.moosynth named samples, and within it place files named like drum_36_48000.raw where 36 is the MIDI note number, and 48000 is the sample rate of the stereo 16-bit signed-integer raw PCM data contained within the file. Make files for each sample rate you might want to use. In other words, sox drum.wav -t raw -r 48000 -c 2 -b 16 -e signed-integer ~/.moosynth/samples/drum_36_48000.raw, at least until next week when SOX changes its command line syntax again.

I've tested it on three systems and four sound cards so far, and so far "hw:0,0" has always been a good choice. In half of the cases even "default" worked fine. So start out by running this command:

./moosynth --device hw:0,0

If that doesn't work out, just run the same command without anything after "--device" and it will print out a list of valid device names on your system. Then you can try them one-by-one until you find one that works.

To make sound, you need to connect something to it which is able to send it MIDI events. (Those piano keyboards in the GUI are informational-only, you can't click on them to play notes.) For example, to play a MIDI file, first find the port number using the aconnect command, like this:

aconnect -io

This will produce output that looks like this:

client 0: 'System' [type=kernel]

0 'Timer '

1 'Announce '

client 14: 'Midi Through' [type=kernel]

0 'Midi Through Port-0'

client 16: 'SB Live! 5.1' [type=kernel]

0 'EMU10K1 MPU-401 (UART)'

client 17: 'Emu10k1 WaveTable' [type=kernel]

0 'Emu10k1 Port 0 '

1 'Emu10k1 Port 1 '

2 'Emu10k1 Port 2 '

3 'Emu10k1 Port 3 '

client 24: 'USB Keystation 88es' [type=kernel]

0 'USB Keystation 88es MIDI 1'

client 128: 'Moosfet's Synthesizer' [type=user]

0 'MIDI Input '

client 129: 'nosefart' [type=user]

0 'cool stuff '

aplaymidi --port 128:0 whatever.mid

MIDI channel 10 has been muted, as the synthesizer presently doesn't produce drum sounds.

As for how to use the synthesizer itself, at present it doesn't do a whole lot. If you can't figure out how to do something (e.g. save the instruments you create) it's because it doesn't do that yet. It's a work in progress.

The sole exception to that is that it does have some support for percussion. Create a directory in ~/.moosynth named samples, and within it place files named like drum_36_48000.raw where 36 is the MIDI note number, and 48000 is the sample rate of the stereo 16-bit signed-integer raw PCM data contained within the file. Make files for each sample rate you might want to use. In other words, sox drum.wav -t raw -r 48000 -c 2 -b 16 -e signed-integer ~/.moosynth/samples/drum_36_48000.raw, at least until next week when SOX changes its command line syntax again.
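The naming convention for percussion samples can be illustrated with a small C helper. This is a hypothetical sketch of the convention as described, not moosynth's actual sample loader; it only assumes the file name pattern and the stereo 16-bit format given above.

```c
#include <assert.h>
#include <stdio.h>

/* Stereo 16-bit PCM: 2 channels x 2 bytes per frame. */
#define BYTES_PER_FRAME 4

/* Parse a name like "drum_36_48000.raw" into a MIDI note number and a
 * sample rate. Returns 1 on success, 0 if the name doesn't fit the pattern. */
static int parse_sample_name(const char *name, int *note, int *rate) {
    int end = 0;  /* %n stores how far the whole pattern matched */
    if (sscanf(name, "drum_%d_%d.raw%n", note, rate, &end) != 2 || name[end] != '\0')
        return 0;
    return *note >= 0 && *note <= 127 && *rate > 0;
}

/* Build the file name for a given note and rate (returns snprintf's result). */
static int make_sample_name(char *buf, size_t size, int note, int rate) {
    return snprintf(buf, size, "drum_%d_%d.raw", note, rate);
}

/* Number of stereo frames in a raw file of `bytes` bytes. */
static long frames_in_file(long bytes) {
    assert(bytes % BYTES_PER_FRAME == 0);
    return bytes / BYTES_PER_FRAME;
}
```

For example, a one-second sample at 48000 Hz is 48000 frames of 4 bytes each, so the raw file should be 192000 bytes long.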

What if it doesn't work?

I'd kind of like to hear about it, so let me know. AFAIK, there aren't any bugs in it, just a lot of unimplemented features.

I'm presently aware of only two potential problems:

The first is that if "enable software compositing window manager" is enabled in Control Panel -> Windows, dragging the synthesizer's windows around or dragging any other application's windows over any of the synthesizer's windows will cause extreme lag. I don't know what the issue is there. I note that the same happens with half of the GLFW test programs, but not with glxgears. So I assume it's some issue with GLFW, but as I haven't a clue what the point of the "software compositing window manager" is (enabling it doesn't seem to do anything other than cause this problem) I haven't bothered to look for a solution.

The second is that, if running with --realtime and with a low buffer size, such that it decides to never sleep within the synthesis thread, MIDI events from an external keyboard are occasionally held up within ALSA for about ten seconds. This seems to be an ALSA problem, as it has nothing to do with my synthesizer: it happens even without my synthesizer running, with the real-time program being a dummy test program that doesn't use ALSA at all. Thankfully, using --realtime isn't necessary, so you can just not use it or use a larger buffer size and avoid the problem.

As for other things you might try before contacting me, I did add a few command line options to make some stuff configurable. Just use "--help" to see what they are. In particular, if it just isn't possible to get direct DMA access with any of your audio devices (which is quite possible if your audio devices are USB devices) then you can use a command line like this:

./moosynth --device hw:0,0 --buffer 256 --latency --realtime # use on multi-core systems only

This instructs it to behave like a "normal" low-latency synthesizer, utilizing a small buffer size, never rewinding the synthesis engine, and using real-time scheduling to attempt to keep up with the audio output. You can't do this on a single-core system: with buffer sizes of less than 10 ms, the synthesis thread never calls sleep() (in my experience a sleep of 1 ms is far too likely to take up to 10 ms, even with real-time scheduling enabled), so a real-time thread that never sleeps would leave little CPU time for anything else.
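The sleep rule can be made concrete with a little arithmetic. This sketch assumes that --buffer counts frames and that the sample rate is 48000 Hz; neither is stated above, so check --help. The 10 ms threshold is the one from the text, and the function names are hypothetical.

```c
#include <assert.h>

/* From the text: a requested 1 ms sleep can take up to 10 ms, so sleeping
 * is only safe when the buffer holds at least that much audio. */
#define SLEEP_THRESHOLD_US 10000L

/* Buffer length in microseconds, assuming `frames` counts sample frames. */
static long buffer_us(long frames, long rate) {
    assert(frames >= 0 && rate > 0);
    return frames * 1000000L / rate;
}

/* Hypothetical policy: sleep between renders only with a buffer of 10 ms
 * or more; otherwise busy-wait, which keeps one core fully occupied. */
static int may_sleep(long frames, long rate) {
    return buffer_us(frames, rate) >= SLEEP_THRESHOLD_US;
}
```

Under those assumptions, --buffer 256 is about 5.3 ms of audio, below the 10 ms threshold, so the synthesis thread would spin instead of sleeping and keep a whole core busy.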

Also, for better or worse (probably worse), I added Jack support to it. Just sudo apt-get install libjack-jackd2-dev, then recompile it with ./compile jack and run it with ./moosynth --jack and hope for the best. Over the last ten years, I've seen exactly one system where Jack works correctly, and I'm not even sure of that since Jack does occasionally work well, when the phase of the moon is correct and the planets are aligned and stuff like that, so it may have just been that system's lucky day. On every other occasion I've found it to be an xrun-inducing monster which at best, with hours of tweaking, can be made barely usable and kept in that state for a few more hours before a new problem develops. So I don't recommend using it, but if you're lucky enough to have one of the rare systems where Jack actually performs well, then you might as well use it I guess. Note, however, that using Jack defeats the minimal-latency aspect of my synthesizer, as Jack provides no means to rewind the audio buffer. Thus it's as if you're always running with the --latency option.

When will it be updated?

Fuck if I know, but probably never.

The best synthesizers aren't going to be parametric. The best synthesizers will be custom code for every instrument. To that end, what is needed is something like Jack that allows many connections from many synthesizers, but which implements this minimal latency approach by allowing the connected synthesizers to re-send audio that they've already sent.

So if I continue to work on this at all, it'll probably take the form of a daemon to do just that, and at that point I'll probably just write small programs that create specific sounds I want, rather than create a parametric synthesizer with lots of controls to play with.

However, it's unlikely that I'll even do that. The simple fact is that the design of everything is wrong in my opinion, and so to get what I want, not only do I need to create my own synthesizers and my own Jack-like connection daemon, but I also need to create my own sequencer. Altogether, it's a lot of work, my sleep disorder only allows for about ten days of productivity per year, and this project isn't my highest priority. So unless someone else wants to pick up where I've left off, latency issues will continue to be pervasive in Linux audio.
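The Jack-like daemon described above doesn't exist, so what follows is only a rough, hypothetical sketch of its core data structure. The assumption is that the daemon keeps each client's contribution separately until the last moment, so a client can re-send a stretch of audio it has already sent and simply replace its earlier version before mixing. Process boundaries, locking and the safety margin a real daemon would need are all left out.

```c
#include <assert.h>

#define MAX_CLIENTS 4
#define WINDOW      1024  /* hypothetical frames of look-ahead the daemon holds */

typedef struct {
    long  play_pos;                   /* next frame the hardware will read */
    float part[MAX_CLIENTS][WINDOW];  /* each client's audio, ring-indexed by frame */
} Mixer;

/* A client sends (or re-sends) n frames starting at absolute frame `start`.
 * Re-sent frames replace that client's earlier audio. Frames already played
 * or beyond the window are dropped; returns how many frames were accepted. */
static int mixer_submit(Mixer *m, int client, long start,
                        const float *audio, int n) {
    assert(client >= 0 && client < MAX_CLIENTS);
    int accepted = 0;
    for (int i = 0; i < n; i++) {
        long t = start + i;
        if (t < m->play_pos || t >= m->play_pos + WINDOW)
            continue;
        m->part[client][t % WINDOW] = audio[i];
        accepted++;
    }
    return accepted;
}

/* The mixed output for frame t: the sum of every client's latest audio. */
static float mixer_frame(const Mixer *m, long t) {
    float sum = 0.0f;
    for (int c = 0; c < MAX_CLIENTS; c++)
        sum += m->part[c][t % WINDOW];
    return sum;
}

/* Hand the next frame to the hardware and clear its slot, which will be
 * reused for frame play_pos + WINDOW. */
static float mixer_play(Mixer *m) {
    float out = mixer_frame(m, m->play_pos);
    for (int c = 0; c < MAX_CLIENTS; c++)
        m->part[c][m->play_pos % WINDOW] = 0.0f;
    m->play_pos++;
    return out;
}
```

Re-sending only ever replaces the sending client's own audio, so one synthesizer rewinding never disturbs what the others have already delivered.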
